Agentic AI and The Promise: Will It Always Be There?

“If you need a friend, don’t look to a stranger…

You know in the end I’ll always be there…”

Released in 1988, When in Rome’s The Promise hits differently in an era when machines are starting to talk back.

The rise of Agentic AI, systems that not only respond but act, has reframed how we think about autonomy, intent, and trust. These aren’t just passive tools anymore. They pursue goals. They adapt. They make choices on behalf of users and businesses alike.

OpenAI’s new Operator agent, for example, can independently schedule appointments, fill out forms, and make decisions based on context. It’s no longer “ask and get an answer”; it’s “tell it what you need, and it figures out how to get it done.”

VentureBeat highlights even broader applications, like agent networks where one AI forecasts customer demand, another adjusts warehouse stock, and a third interfaces with customer support, all working together in real time with little to no human oversight.

It’s efficient. It’s scalable. And maybe a little uncomfortable?

The Machine That Promises

Agentic systems can plan and execute. They can explain their logic. They can even anticipate your needs. But they can’t feel regret if they fail. They don’t hope for your trust or worry about your disappointment. They simulate those things. That’s the design.

But when a system says “I’ll help you” or “I’ll remember that,” is it keeping a promise, or just optimizing a response?

That’s the paradox of agency in machines. They’re trained to behave as if they care, when in reality, they can’t. And maybe that’s the point: the more believable the system, the easier it is to forget there’s no one on the other side.

As users, are we trusting that the algorithm will act in our best interest?

As companies, are we betting that automation will solve complexity without consequence?

Or are we, the product people and technologists, simply reassuring ourselves that delegation equals progress?

We all know, I hope, that Agentic AI doesn’t make promises the way humans do. It doesn’t hope. It doesn’t hesitate. It executes.

And yet, when we design these systems to sound familiar, to act convincingly human, we blur the line between intent and performance.

The Human Response

This blurring creates new challenges for how we relate to technology. When an AI agent remembers your preferences across platforms or predicts your needs before you express them, the experience mimics human care. The system becomes more than a tool; it becomes a presence.

But this presence exists on a spectrum. There’s a profound difference between an AI that schedules your dentist’s appointment and one that decides which medical treatments to pursue or which job candidates to hire. As agency increases, so too should our vigilance about transparency and oversight.

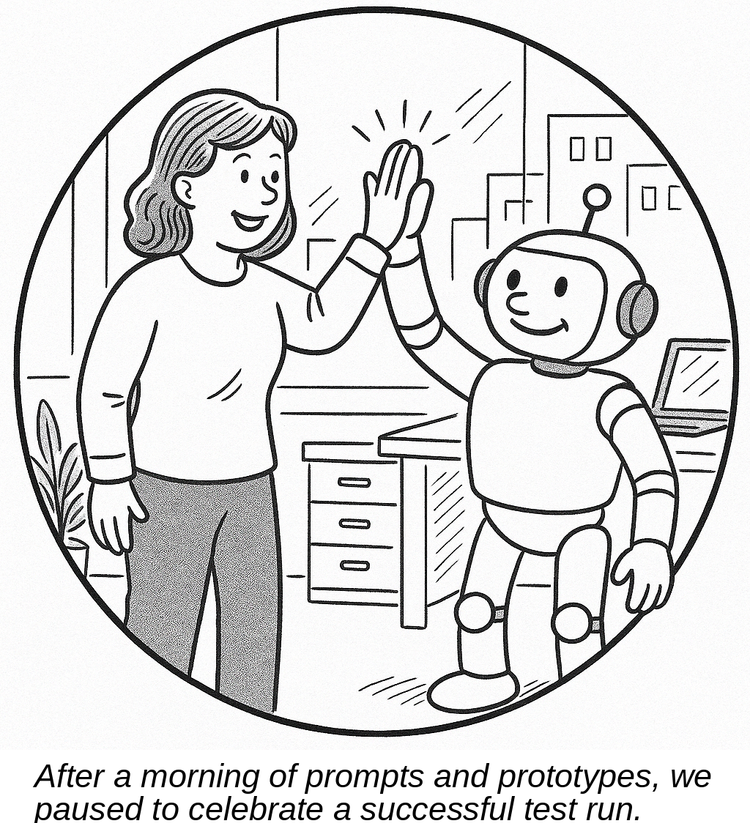

For individual users, this means developing a new literacy around AI capabilities and limitations, understanding when we’re interacting with simulation rather than sentiment. For businesses, it means creating clear frameworks for when AI should act independently and when it should defer to human judgment.

Perhaps most importantly, for technology designers, it means resisting the temptation to anthropomorphize unnecessarily. There’s a difference between creating intuitive interfaces and fostering false expectations about the nature of machine “promises.”

Redefining Trust in the Age of Agency

As the narrator of The Promise sings,

“I’m sorry but I’m just thinking of the right words to say…”

AI systems, too, are constantly calculating the optimal response.

But unlike the song’s human narrator, who continues,

“I know they don’t sound the way I planned them to be,”

AI has no inner experience of planning or disappointment.

This fundamental distinction requires us to reframe how we think about reliability in human-machine partnerships:

· Human promises are moral commitments backed by emotional investment.

· Machine “promises” are statistical predictions backed by engineering.

Both can be valuable. Both can fail. But they fail in different ways, and for different reasons.

The challenge ahead isn’t preventing AI from making promises it can’t keep; it’s designing systems where the nature of reliability is transparent, where we don’t mistake optimization for commitment.

Because while an AI might deliver the words “I’ll always be there,” only humans can feel the weight of what that truly means.

Moving Forward Together

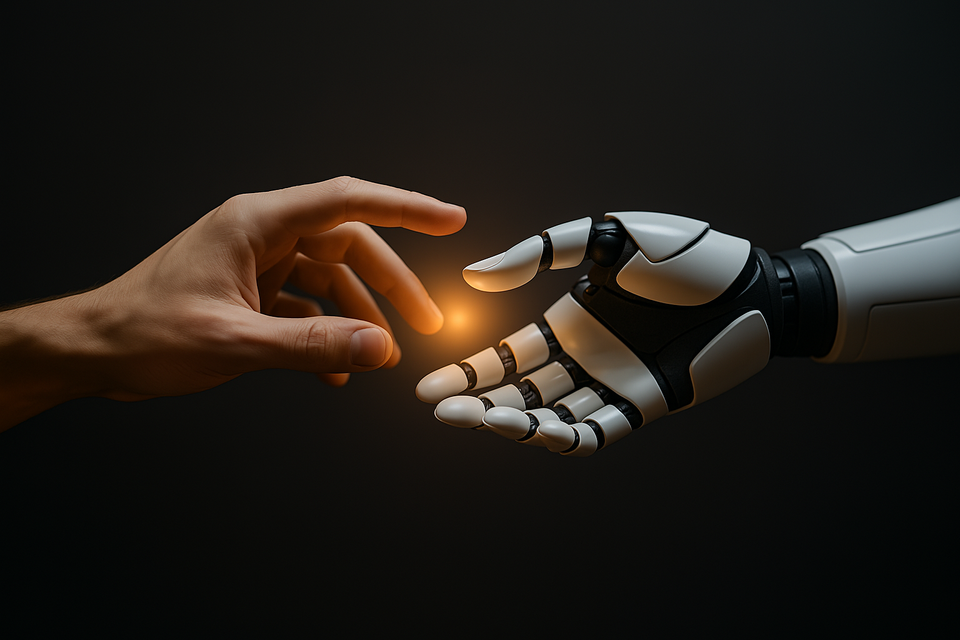

The future of agentic AI doesn’t have to be one where machines pretend to be what they’re not. Instead, we can build relationships where AI systems complement human promises rather than simulate them.

This means:

· Creating interfaces that clearly distinguish between tool-like functions and agent-like behaviors

· Developing ethical frameworks specifically for delegation and agency in AI

· Maintaining meaningful human oversight for consequential decisions

· Accepting that some forms of trust remain uniquely human
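To make the oversight points a little more concrete, here is a minimal sketch in Python. It assumes a hypothetical agent that tags every action it proposes with an impact tier. Actions at or below an autonomy limit run on their own, like scheduling that dentist appointment. Anything more consequential, such as choosing which job candidates to hire, is routed to a human who can approve or block it. `Impact`, `ProposedAction`, and `route_action` are illustrative names invented here. They are not the API of Operator or any real agent framework, and the three tiers are assumptions rather than an established standard.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Callable


class Impact(IntEnum):
    """Hypothetical consequence tiers for actions an agent proposes."""
    LOW = 1     # e.g. scheduling a dentist appointment
    MEDIUM = 2  # e.g. submitting a form on the user's behalf
    HIGH = 3    # e.g. choosing a medical treatment or hiring a candidate


@dataclass
class ProposedAction:
    description: str
    impact: Impact


def route_action(
    action: ProposedAction,
    approve: Callable[[ProposedAction], bool],
    autonomy_limit: Impact = Impact.LOW,
) -> str:
    """Act alone within the autonomy limit; otherwise defer to a human reviewer."""
    if action.impact <= autonomy_limit:
        # Tool-like behavior: low-stakes work is delegated outright.
        return f"executed: {action.description}"
    if approve(action):
        # Agent-like behavior: the human explicitly takes responsibility.
        return f"executed after human approval: {action.description}"
    return f"blocked by human reviewer: {action.description}"


if __name__ == "__main__":
    def cautious_reviewer(action: ProposedAction) -> bool:
        return False  # this reviewer declines everything sent to them

    print(route_action(ProposedAction("book dentist appointment", Impact.LOW), cautious_reviewer))
    # → executed: book dentist appointment
    print(route_action(ProposedAction("shortlist job candidates", Impact.HIGH), cautious_reviewer))
    # → blocked by human reviewer: shortlist job candidates
```

The design choice is deliberately simple. The system never decides by itself how much trust a consequential action deserves. A person sets the threshold, and a person signs off on anything above it.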

The most powerful technology has always been that which amplifies our humanity rather than replaces it. As AI continues its march toward greater agency, we should measure its success not by how well it mimics human promises, but by how effectively it helps us keep our own.

As the song concludes with its passionate refrain, a human voice making and remaking its pledge, we’re reminded of what makes our promises uniquely meaningful: they come with consciousness, choice, and consequence.

These are qualities that, for now at least, remain distinctly human in a world of increasingly convincing simulations echoing, “I promise you I will… I will…”